Human-to-Robot Motion Transfer

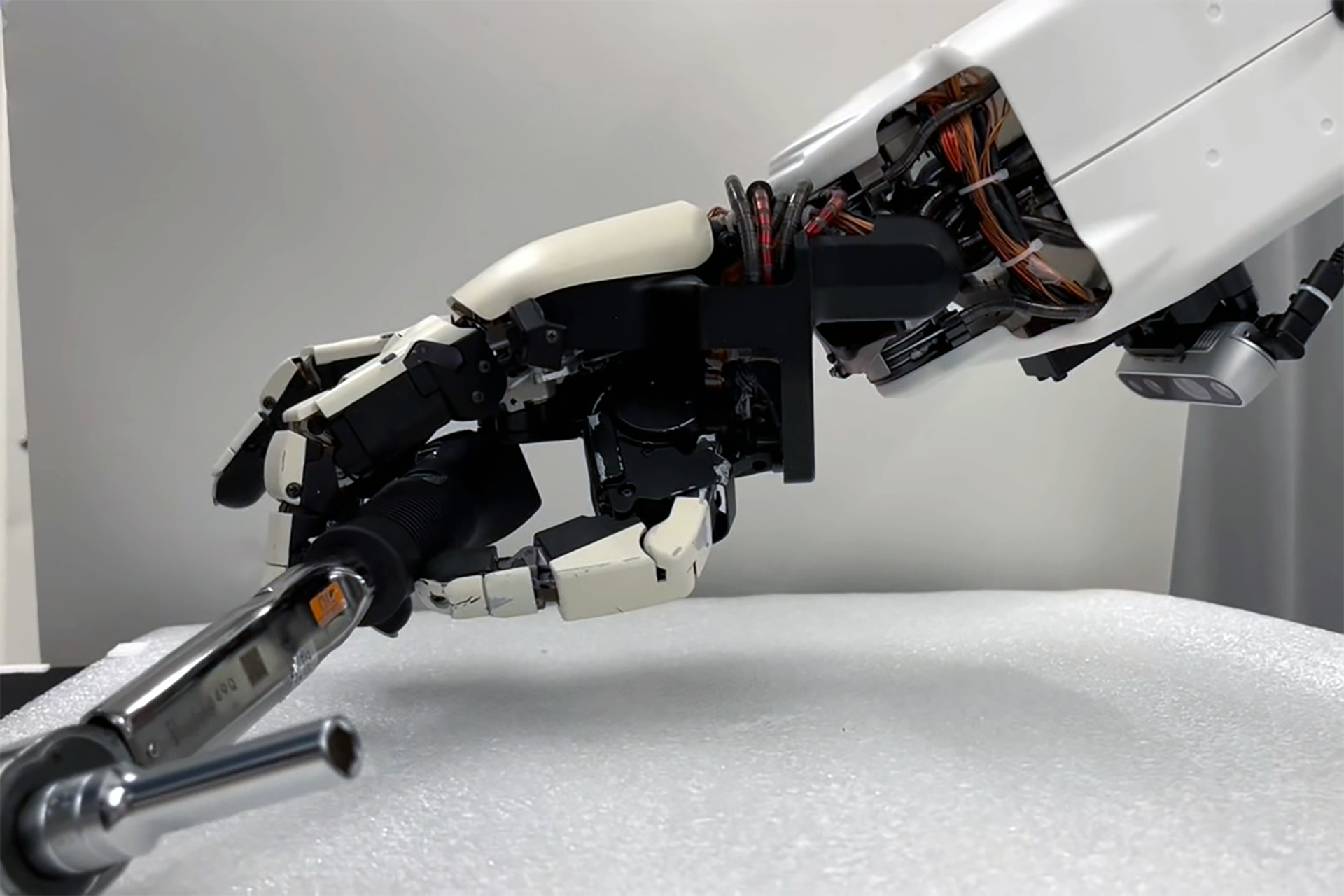

Robots and humans have fundamentally different hand geometries, yet we want operators to transfer nuanced grasping skills, including finger gaiting, directly to Honda’s dexterous robotic hand. Our research bridges this gap with an adaptive hand re-targeting model powered by residual Gaussian process learning.

Rather than mapping human joint angles one-to-one, the system translates the operator’s intended, coordinated contacts and grasp types, from power grasps to delicate fingertip manipulations, into feasible robot poses that preserve the essence of the human action. Because it is not bound to joint-by-joint correspondence, this approach expands the robot’s reachable workspace and lets it reproduce a broad range of gestures, even when its kinematics differ significantly from the operator’s.
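The article does not describe the model’s internals. As an illustration only, here is a minimal sketch of the general residual Gaussian process idea: a simple hand-crafted mapping produces a nominal robot target, and a GP learns only the correction between that guess and demonstrated robot targets. Everything specific here is an assumption, not Honda’s implementation: the hypothetical `nominal_retarget` baseline (wrist-relative fingertip positions scaled by a hand-size ratio), the `ResidualGPRetargeter` class, the RBF kernel and its hyperparameters, the box `bounds` used as a crude stand-in for robot feasibility, and the synthetic calibration data.

```python
import numpy as np


def nominal_retarget(human_tips, scale):
    """Hypothetical baseline: scale wrist-relative human fingertip positions
    by a fixed robot-to-human hand-size ratio."""
    return scale * human_tips


def rbf_kernel(a, b, length_scale):
    """Squared-exponential (RBF) kernel between the rows of a and b."""
    sq_dist = (
        np.sum(a**2, axis=1)[:, None]
        + np.sum(b**2, axis=1)[None, :]
        - 2.0 * a @ b.T
    )
    return np.exp(-0.5 * np.maximum(sq_dist, 0.0) / length_scale**2)


class ResidualGPRetargeter:
    """GP regression on the residual left by the nominal mapping.

    With a zero-mean GP prior, the learned correction decays to zero far
    from the calibration data, so predictions fall back to the baseline.
    """

    def __init__(self, scale, length_scale=0.1, noise=1e-4, bounds=None):
        self.scale = scale
        self.length_scale = length_scale
        self.noise = noise
        # Optional (lower, upper) box: a crude, assumed stand-in for robot feasibility.
        self.bounds = bounds

    def fit(self, human_tips, robot_tips):
        # What the nominal mapping gets wrong on the calibration pairs.
        residual = robot_tips - nominal_retarget(human_tips, self.scale)
        k = rbf_kernel(human_tips, human_tips, self.length_scale)
        k += self.noise * np.eye(len(human_tips))
        chol = np.linalg.cholesky(k)
        # alpha = K^{-1} residual, using the Cholesky factorization K = L L^T.
        self.alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, residual))
        self.train_x = human_tips
        return self

    def predict(self, human_tips):
        # Posterior mean of the residual, added on top of the baseline.
        k_star = rbf_kernel(human_tips, self.train_x, self.length_scale)
        target = nominal_retarget(human_tips, self.scale) + k_star @ self.alpha
        if self.bounds is not None:
            target = np.clip(target, *self.bounds)
        return target


def synthetic_robot_tips(human_tips):
    """Made-up ground truth for this demo only: baseline plus a smooth offset."""
    return 0.8 * human_tips + 0.01 * np.sin(10.0 * human_tips)


rng = np.random.default_rng(0)
x_cal = rng.uniform(-0.1, 0.1, size=(40, 3))  # 40 synthetic calibration samples (m)
model = ResidualGPRetargeter(scale=0.8).fit(x_cal, synthetic_robot_tips(x_cal))

x_test = rng.uniform(-0.1, 0.1, size=(200, 3))
gp_err = np.abs(model.predict(x_test) - synthetic_robot_tips(x_test)).mean()
nominal_err = np.abs(nominal_retarget(x_test, 0.8) - synthetic_robot_tips(x_test)).mean()
print(f"nominal error: {nominal_err:.5f} m, residual-GP error: {gp_err:.5f} m")
```

Keeping the hand-crafted mapping as a prior means the GP only has to learn a small correction from a modest calibration set, and for human poses unlike anything seen during calibration the output degrades gracefully to the baseline rather than to an arbitrary pose.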

The result is more intuitive training, more natural teleoperation, and a foundation for advanced avatar-robot control in which human skill is expressed through robotic precision.