HEV-I - Honda Research Institute USA

Introduction

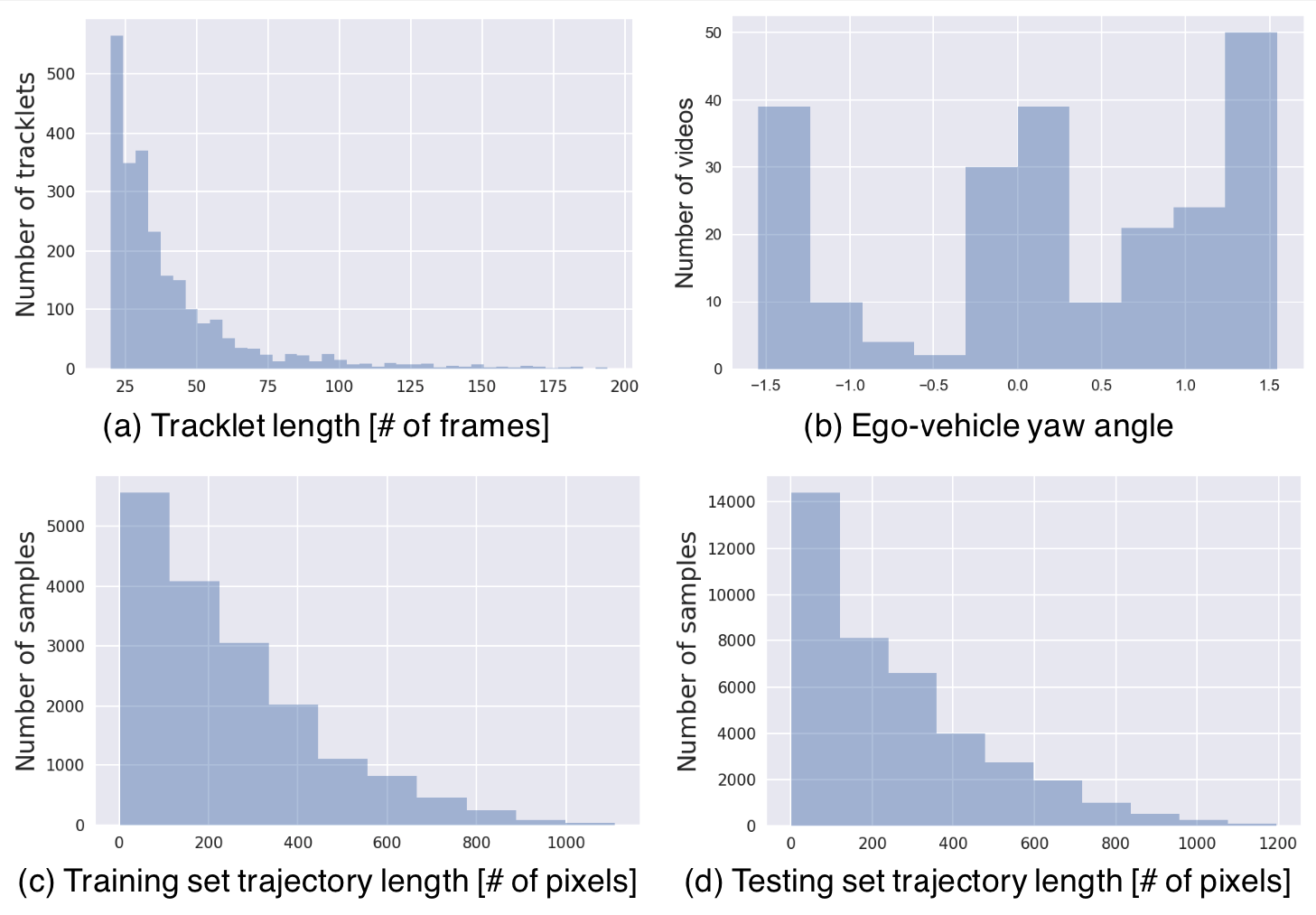

The Honda Egocentric View-Intersection Dataset (HEV-I) is introduced to enable research on traffic participant interaction modelling, future object localization, and learning driver actions in challenging driving scenarios. The dataset includes 230 video clips of real human driving at different intersections in the San Francisco Bay Area, collected using an instrumented vehicle equipped with sensors including cameras and GPS/IMU, and recording vehicle state signals.

The HEV-I dataset was first used and released with our paper Egocentric Vision-based Future Vehicle Localization for Intelligent Driving Assistance Systems, which appears in ICRA 2019.

The dataset has the following specifications and statistics:

- 230 videos, stored as 1280×640 image frames at 10 Hz,

- Object classes: car, pedestrian, bicycle, motorcycle, truck, bus, traffic light, stop sign.

- Ego actions:
  - going straight,
  - accelerating, braking,
  - turning right, turning left,
  - yielding (to pedestrians, cyclists or cars),
  - stopping (at stop sign or traffic lights)
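When preparing a data loader, the label vocabularies and frame rate listed above can be mirrored in code. The sketch below is a minimal illustration assuming Python; the enum names, their string values, and the helpers `frame_to_seconds` and `seconds_to_frames` are hypothetical and do not describe the dataset's actual file or annotation format. It encodes the listed object classes and ego actions and converts between frame indices and elapsed time at the stated 10 Hz rate.

```python
from enum import Enum

# Hypothetical label vocabularies transcribed from the HEV-I specification list.
# Names and string values are illustrative, NOT the dataset's on-disk format.
class ObjectClass(Enum):
    CAR = "car"
    PEDESTRIAN = "pedestrian"
    BICYCLE = "bicycle"
    MOTORCYCLE = "motorcycle"
    TRUCK = "truck"
    BUS = "bus"
    TRAFFIC_LIGHT = "traffic light"
    STOP_SIGN = "stop sign"


class EgoAction(Enum):
    GOING_STRAIGHT = "going straight"
    ACCELERATING = "accelerating"
    BRAKING = "braking"
    TURNING_RIGHT = "turning right"
    TURNING_LEFT = "turning left"
    YIELDING = "yielding"  # to pedestrians, cyclists or cars
    STOPPING = "stopping"  # at a stop sign or traffic light


FRAME_RATE_HZ = 10                    # frames are sampled at 10 Hz
IMAGE_WIDTH, IMAGE_HEIGHT = 1280, 640  # frame resolution in pixels


def frame_to_seconds(frame_index: int) -> float:
    """Elapsed time of a frame within its clip, assuming frame 0 is at t = 0."""
    if frame_index < 0:
        raise ValueError("frame_index must be non-negative")
    return frame_index / FRAME_RATE_HZ


def seconds_to_frames(duration_s: float) -> int:
    """Number of frames spanned by a duration, e.g. a prediction horizon."""
    if duration_s < 0:
        raise ValueError("duration_s must be non-negative")
    return round(duration_s * FRAME_RATE_HZ)
```

For example, `frame_to_seconds(25)` gives 2.5 seconds, and a 1-second horizon corresponds to `seconds_to_frames(1.0)`, i.e. 10 frames. Keeping the frame rate in a single constant makes it easy to adjust if a loader subsamples the clips.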

Dataset

In the current release, the dataset is available only to researchers at universities in the United States.

Download the dataset

The dataset is available for non-commercial use only. You must be affiliated with a university and use your university email address to make the request. Use this link to make the download request.

Citation

BibTeX

If you find this dataset useful in your research, please consider citing the following papers:

@inproceedings{yao2019egocentric,
  title={Egocentric Vision-based Future Vehicle Localization for Intelligent Driving Assistance Systems},
  author={Yao, Yu and Xu, Mingze and Choi, Chiho and Crandall, David J and Atkins, Ella M and Dariush, Behzad},
  booktitle={International Conference on Robotics and Automation},
  year={2019}
}

@article{malla2019nemo,
  title={Nemo: Future object localization using noisy ego priors},
  author={Malla, Srikanth and Dwivedi, Isht and Dariush, Behzad and Choi, Chiho},
  journal={arXiv preprint arXiv:1909.08150},
  year={2019}
}