LOKI - Honda Research Institute USA

Introduction

The LOKI dataset was collected in central Tokyo, Japan, using an instrumented Honda SHUTTLE DBA-GK9 vehicle. It contains videos of vehicle and pedestrian motion in dense urban environments.

- Over 28K agents of 8 classes (Pedestrian, Car, Bus, Truck, Van, Motorcyclist, Bicyclist, Other)

- 644 scenarios with an average length of 12.6 seconds

- Complex, interaction-heavy scenes: 21.6 agents per scene on average

- Labels annotated at 5 FPS, with RGB images, LiDAR point clouds, and 2D/3D bounding boxes
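Multiplying the averages above gives a rough sense of the dataset's scale. This is a back-of-envelope estimate derived here, not an official count, and it assumes every scenario is annotated at 5 FPS for its full length:

```latex
\begin{aligned}
T_{\text{total}} &\approx 644 \times 12.6\,\text{s} = 8114.4\,\text{s} \approx 2.25\,\text{h},\\
N_{\text{frames}} &\approx 8114.4\,\text{s} \times 5\,\text{frames/s} \approx 40{,}572\ \text{annotated frames}.
\end{aligned}
```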

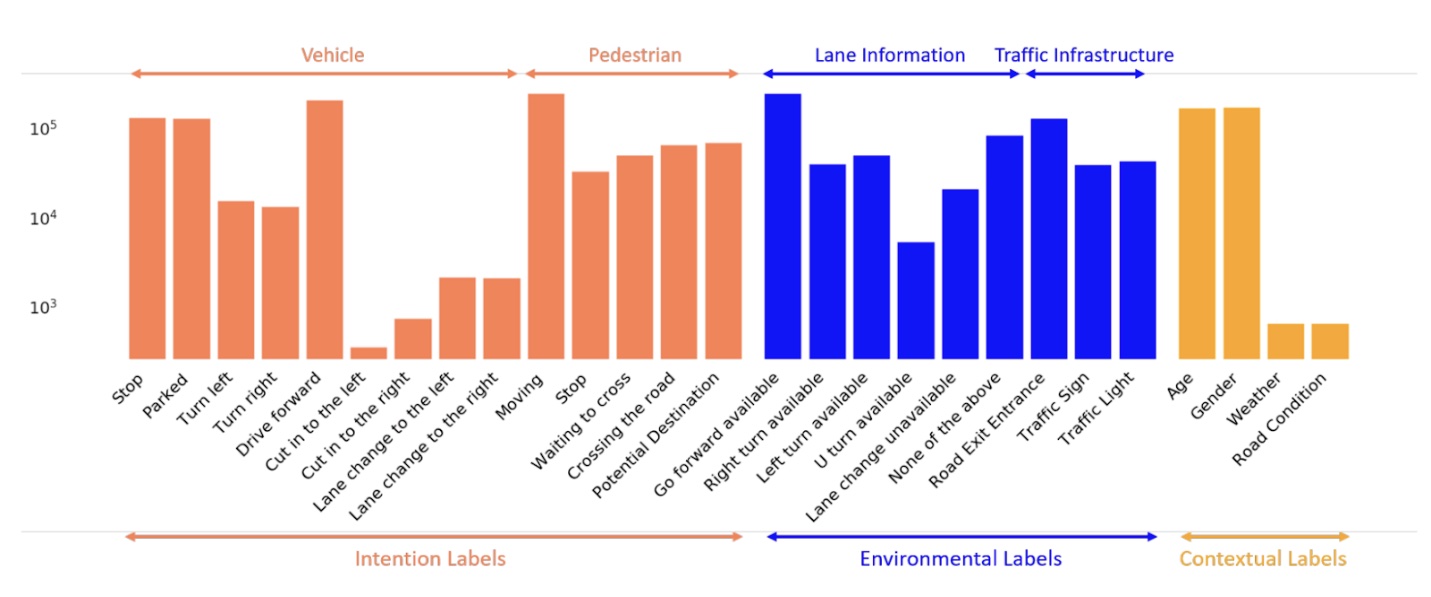

- Labels
  - Intention labels (stopped, parked, turn left, etc.)
  - Environmental labels (traffic signs, traffic lights, road topology, etc.)
  - Contextual labels (age, gender, weather, road condition)

- Sensors
  - A color SEKONIX SF332X-10X video camera: 30 Hz frame rate, 1928 × 1280 resolution, 60° field of view (FOV)
  - Four Velodyne VLP-32C 3D LiDARs: 10 Hz spin rate, 32 laser beams, 200 m range, 40° vertical FOV
  - An MTi-G-710-GNSS/INS-2A8G4 unit providing gyroscope, accelerometer, and GPS outputs
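Because the camera (30 Hz) and the LiDARs (10 Hz) run faster than the 5 FPS annotations, each annotated frame would line up with every 6th camera frame and every 2nd LiDAR sweep, provided the streams start together and are uniformly sampled. That alignment is an assumption made here for illustration; the released files may already be subsampled or synchronized by timestamp. A minimal Python sketch of the index mapping, using a hypothetical helper `raw_frame_index`:

```python
# Sensor and annotation rates quoted in the sensor list above.
CAMERA_HZ = 30
LIDAR_HZ = 10
LABEL_FPS = 5


def raw_frame_index(label_idx: int, sensor_hz: int, label_fps: int = LABEL_FPS) -> int:
    """Return the raw sensor frame index that coincides with annotated frame `label_idx`.

    ASSUMPTION (hypothetical helper): all streams start at the same instant and
    are uniformly sampled, so the mapping is a fixed integer stride.
    """
    if sensor_hz % label_fps != 0:
        raise ValueError("sensor rate must be an integer multiple of the label rate")
    stride = sensor_hz // label_fps  # 6 for the camera, 2 for the LiDAR
    return label_idx * stride


if __name__ == "__main__":
    for k in range(3):
        print(k, raw_frame_index(k, CAMERA_HZ), raw_frame_index(k, LIDAR_HZ))
```

With real recordings, matching each label to the nearest sensor timestamp is more robust than a fixed stride if any frames were dropped.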

Data Distribution

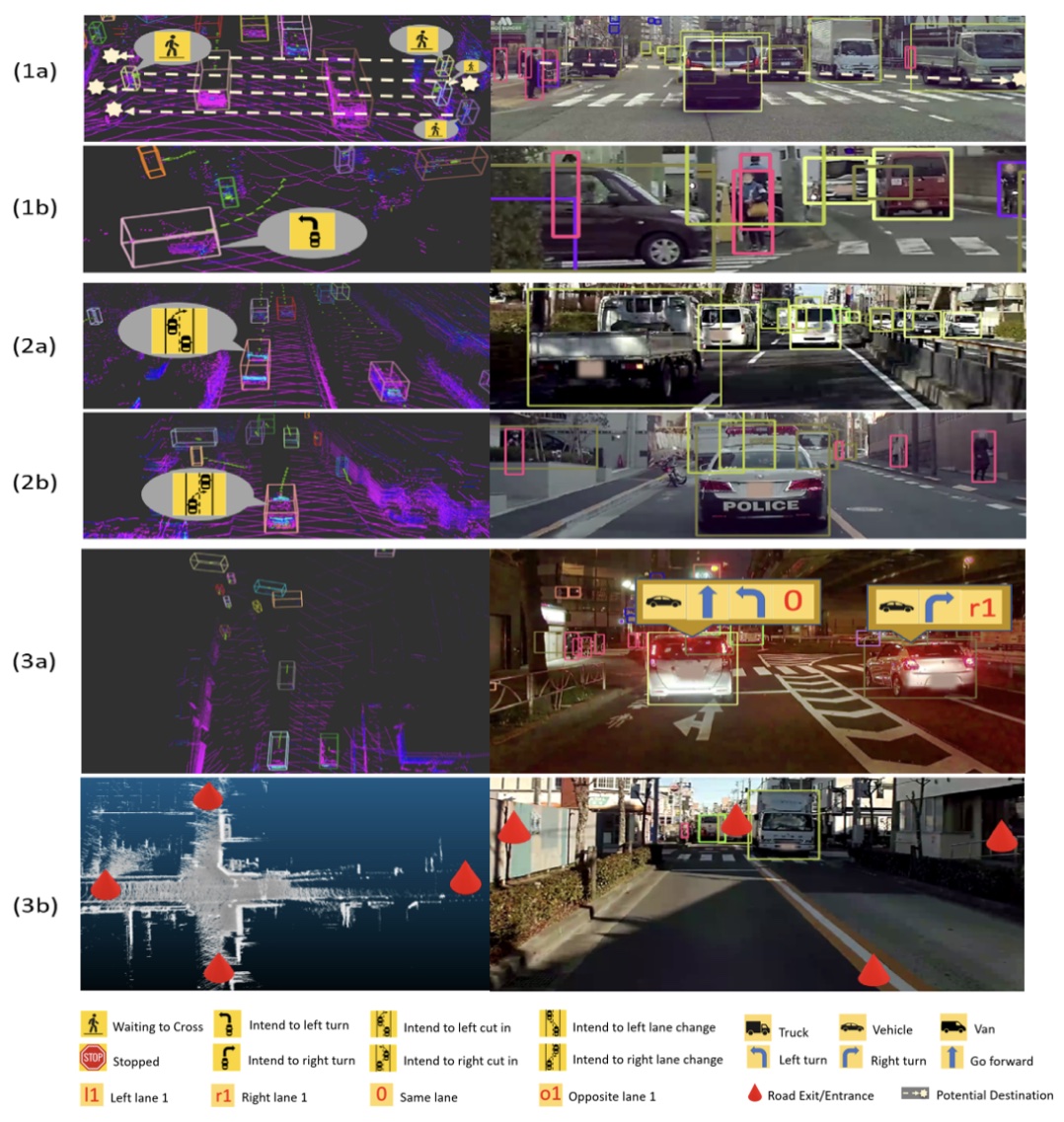

Dataset Visualization

Caption: Visualization of the three types of labels: (1a-1b) intention labels for pedestrians; (2a-2b) intention labels for vehicles; (3a-3b) environmental labels. In each image, the left part comes from the laser scan and the right part from the camera. In (1a), the pedestrian's current status is "Waiting to cross", and the potential destination shows the pedestrian's intention. In (3a), the blue arrow indicates the possible actions in the lane the vehicle is currently in, and the red text gives the lane position relative to the ego-vehicle.

Data Format

```
scenario_xxx/: sequence of sensor data
|
|---- odometry/
|     |---- odom_*.txt: pos_x, pos_y, pos_z, roll, pitch, yaw
|
|---- label/
|     |---- label2d_*.json: labels annotated in camera space (labels, track_id, bbox, not_in_lidar, attributes, potential_destination)
|     |---- label3d_*.txt: labels annotated in point-cloud space (labels, track_id, stationary, pos_x, pos_y, pos_z, dim_x, dim_y, dim_z, yaw, vehicle_state, intended_actions, potential_destination, right turn/left turn/go forward/u turn/lane change not possible/none of the above)
|
|---- pointcloud/
|     |---- pc_*.ply: 360° point cloud
|
|---- image/
|     |---- image_*.png: front-view camera image
|
|---- map/
      |---- map.ply: map point cloud
```
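A minimal Python sketch for reading one frame's odometry and 3D labels. The field order follows the file descriptions in the tree above, but the on-disk layout is assumed rather than documented here: the sketch treats both .txt files as comma-separated (whitespace is also accepted for odometry), tolerates an optional header row, and keeps any columns after `potential_destination` as raw strings. `parse_odometry`, `parse_label3d`, `load_frame`, and the `frame_id` file naming are hypothetical; check them against an actual file before relying on them.

```python
import csv
import io
from pathlib import Path

# Field order taken from the odom_*.txt and label3d_*.txt descriptions.
ODOM_FIELDS = ("pos_x", "pos_y", "pos_z", "roll", "pitch", "yaw")
LABEL3D_COLUMNS = (
    "labels", "track_id", "stationary",
    "pos_x", "pos_y", "pos_z",
    "dim_x", "dim_y", "dim_z", "yaw",
    "vehicle_state", "intended_actions", "potential_destination",
)
FLOAT_FIELDS = {"pos_x", "pos_y", "pos_z", "dim_x", "dim_y", "dim_z", "yaw"}


def parse_odometry(text: str) -> dict:
    """Return the first row of six numbers as a pose dict (ASSUMED comma/space separated)."""
    for line in text.splitlines():
        tokens = line.replace(",", " ").split()
        try:
            numbers = [float(t) for t in tokens]
        except ValueError:
            continue  # skip a header or other non-numeric line
        if len(numbers) >= len(ODOM_FIELDS):
            return dict(zip(ODOM_FIELDS, numbers))  # extra values, if any, are ignored
    raise ValueError("no row with six numeric odometry values found")


def parse_label3d(text: str) -> list:
    """Parse label3d rows into dicts (ASSUMED comma-separated, optional header)."""
    records = []
    for fields in csv.reader(io.StringIO(text)):
        if not fields or fields[0].strip() == "labels":
            continue  # skip blank lines and the optional header row
        record = {}
        for name, raw in zip(LABEL3D_COLUMNS, fields):
            raw = raw.strip()
            # Convert non-empty geometry fields to float; keep categorical fields as strings.
            record[name] = float(raw) if name in FLOAT_FIELDS and raw else raw
        # Trailing columns (e.g. the right turn/left turn/... fields) stay unparsed.
        record["extra"] = [f.strip() for f in fields[len(LABEL3D_COLUMNS):]]
        records.append(record)
    return records


def load_frame(scenario_dir: Path, frame_id: str) -> tuple:
    """Load odometry and 3D labels for one frame (frame_id naming is hypothetical)."""
    odom_text = (scenario_dir / "odometry" / f"odom_{frame_id}.txt").read_text()
    label_text = (scenario_dir / "label" / f"label3d_{frame_id}.txt").read_text()
    return parse_odometry(odom_text), parse_label3d(label_text)
```

For example, `odom, labels = load_frame(Path("scenario_000"), "0000")` would load one frame if the files follow that (hypothetical) naming. Parsing is kept separate from file access so the parsers can be checked on a pasted snippet of a real file first.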

Download the dataset

The dataset is available for non-commercial use only. To request access, you must be affiliated with a university and submit the request from your university email address. Use this link to make the download request.

Citation

This dataset accompanies the paper "LOKI: Long Term and Key Intentions for Trajectory Prediction", published in the Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV 2021).

Please cite the following paper if you find this work useful:

```bibtex
@inproceedings{girase2021loki,
  title={LOKI: Long Term and Key Intentions for Trajectory Prediction},
  author={Girase, Harshayu and Gang, Haiming and Malla, Srikanth and Li, Jiachen and Kanehara, Akira and Mangalam, Karttikeya and Choi, Chiho},
  booktitle={Proceedings of the IEEE/CVF International Conference on Computer Vision},
  pages={9803--9812},
  year={2021}
}
```