EPOSH - Honda Research Institute USA

Introduction

Most video clips are 15 to 60 seconds long and are recorded around construction zones. For each video, about 10 frames are manually selected and annotated. In total, we annotate 5,630 perspective images.

Using COLMAP, we reconstruct a dense 3D point cloud from each video clip. We then manually annotate semantic labels on the 3D points. In total, about 70,000 BEV image / ground truth pairs are constructed.

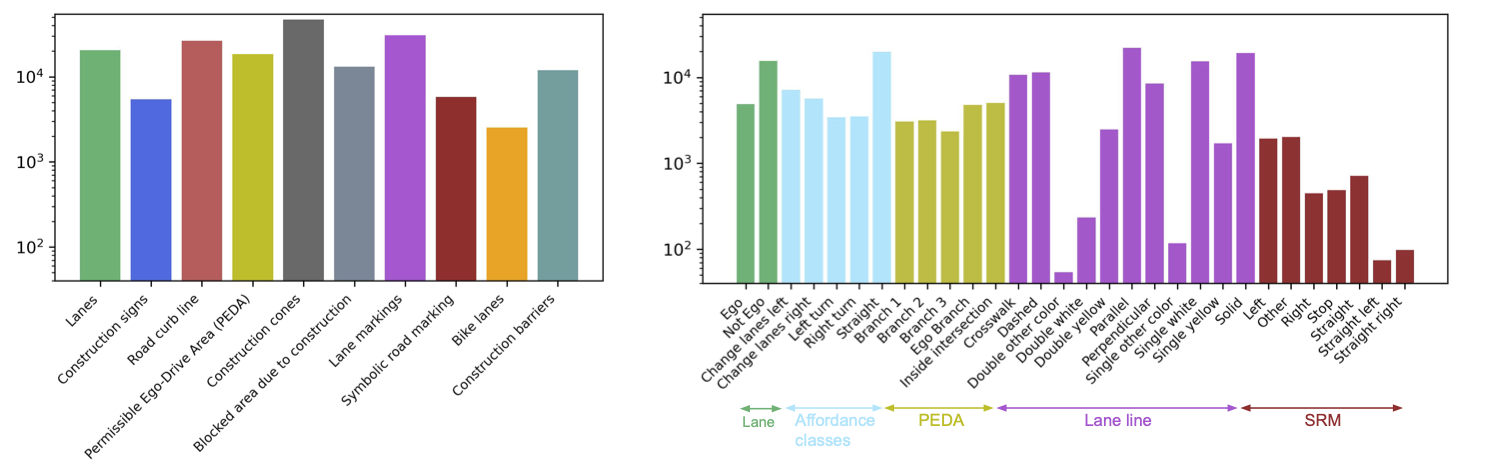

The figure shows the distribution of classes and corresponding attributes in the perspective EPOSH dataset. The left subplot shows the classes, and the right subplot shows the corresponding attributes and affordance classes.

Dataset Visualization

Data Format

DATA:

    pers                                          # perspective annotations
      |
      video_id
        |
        img/frame_n.png                           # images
        viz-img                                   # color coded annotations
        label-topo/frame_n.png                    # topology related annotations
        label-plan/frame_n.png                    # planning related annotations
        label-lane-markings/frame_n.png           # lane marking annotations
        label-affordance/frame_n.png              # affordance annotations
    bev                                           # bev annotations
      |
      video_id
        |
        rectified_resized_img/frame_n.png         # rectified resized images
        rectified_resized_img-viz/frame_n.png     # color coded annotations
        label-topo/frame_n.png                    # topology related annotations
        label-plan/frame_n.png                    # planning related annotations
        resized_rectified_vid.mp4                 # rectified resized video
        vid.mp4                                   # original resized video
    vids
      |
      video_id.mp4                                # set of videos
    splits
      |
      bev_train.txt
      bev_val.txt
      pers_train.txt
      pers_val.txt

Please refer to the dataset readme for more details.
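As an illustration of how the layout above might be traversed, the following Python sketch pairs each image of one video with the label files that share its file name. It is not part of the official tooling. The function names `collect_samples` and `read_split` are hypothetical, the nesting `<root>/<view>/<video_id>/<folder>/frame_n.png` is our reading of the listing, and the split files are assumed to contain one entry per line; the dataset readme remains the authoritative reference for these details.

```python
from pathlib import Path

# Folder names copied from the layout above. The nesting
# <root>/<view>/<video_id>/<folder>/frame_n.png is an assumption.
IMAGE_DIR = {"pers": "img", "bev": "rectified_resized_img"}
LABEL_DIRS = {
    "pers": ("label-topo", "label-plan", "label-lane-markings", "label-affordance"),
    "bev": ("label-topo", "label-plan"),
}


def collect_samples(root, view, video_id):
    """Pair every image of one video with the label files of the same name.

    Hypothetical helper: it only builds paths and does not decode any PNG.
    """
    if view not in IMAGE_DIR:
        raise ValueError(f"view must be one of {sorted(IMAGE_DIR)}, got {view!r}")
    video_dir = Path(root) / view / video_id
    samples = []
    # sorted() orders names lexicographically (frame_10 before frame_2).
    for image_path in sorted((video_dir / IMAGE_DIR[view]).glob("*.png")):
        labels = {}
        for folder in LABEL_DIRS[view]:
            candidate = video_dir / folder / image_path.name
            if candidate.is_file():  # keep only labels that are present
                labels[folder] = candidate
        samples.append({"image": image_path, "labels": labels})
    return samples


def read_split(split_file):
    """Return the non-empty lines of a split file.

    Assumption: one entry per line; the real format is described in the
    dataset readme and may differ.
    """
    with open(split_file, encoding="utf-8") as handle:
        return [line.strip() for line in handle if line.strip()]
```

For example, `collect_samples("DATA", "pers", video_id)` would return one dictionary per perspective frame of that video, each holding the image path and whichever of the four label paths exist on disk.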

Download the dataset

The dataset is available for non-commercial usage. You must be affiliated with a university and use your university email address to make the request. Use this link to make the download request.

Citation

This dataset corresponds to the paper "Bird’s Eye View Segmentation Using Lifted 2D Semantic Features", as it appears in the proceedings of the British Machine Vision Conference (BMVC) 2021. In the current release, the data is available to researchers from universities.

Please cite the following paper if you find the dataset useful in your work:

@inproceedings{dwivedi2021bird,
  title={Bird’s eye view segmentation using lifted 2D semantic features},
  author={Dwivedi, Isht and Malla, Srikanth and Chen, Yi-Ting and Dariush, Behzad},
  booktitle={British Machine Vision Conference (BMVC)},
  pages={6985--6994},
  year={2021}
}